Is the rise of deepfake technology a threat to privacy and consent in the digital age? The proliferation of websites like MrDeepFakes.com suggests that it is. It points to an alarming trend in which artificial intelligence is weaponized against individuals, primarily women, without their knowledge or permission. This issue demands immediate attention from lawmakers, technologists, and society at large.

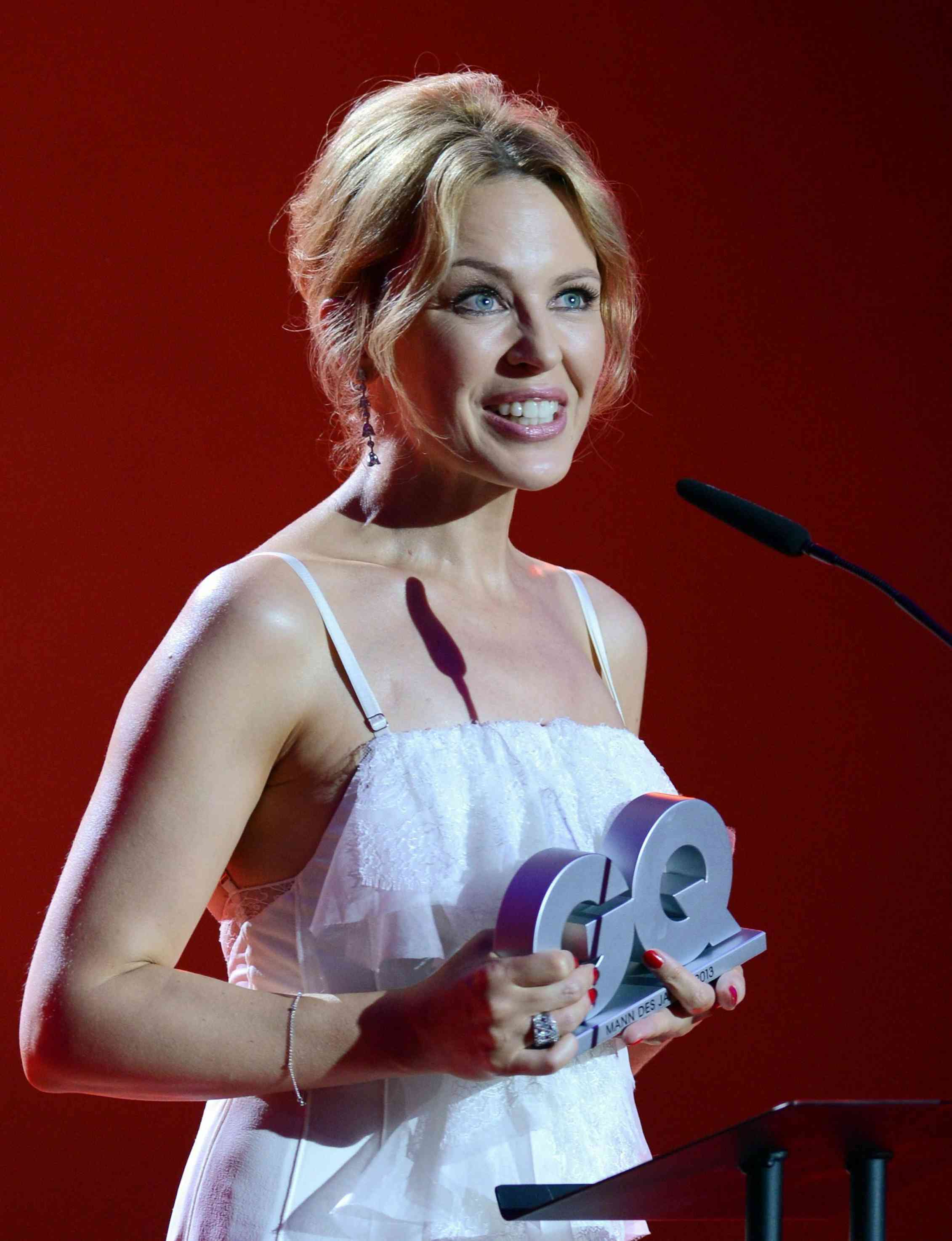

The existence of platforms such as MrDeepFakes.com raises serious ethical questions about how far technological advancement should go when it infringes on personal rights. These sites use sophisticated algorithms to superimpose the faces of celebrities, singers, and actresses onto explicit content, creating hyper-realistic videos that blur the line between reality and fiction. Such practices violate individual dignity and also feed broader societal problems such as harassment, manipulation, and exploitation. Despite growing awareness of these concerns, enforcement mechanisms remain woefully inadequate, allowing illegal activity to persist unchecked.

| Personal Information | Details |
|---|---|
| Name | MrDeepFakes (pseudonym) |
| Location | Dhaka, Bangladesh |
| Website | mrdeepfakes.com |
| Career Focus | Pornographic content creation using AI technology |
| Professional Background | Specializes in generating deepfake videos featuring celebrities |

Examining the operations of MrDeepFakes reveals a complex ecosystem driven by economic incentives and technical sophistication. Researchers have identified more than 84 distinct technologies in use on the platform, which together allow facial features to be integrated seamlessly into adult material. The site reportedly has self-imposed guidelines restricting content to well-established public figures, but evidence suggests these rules are widely disregarded. User interactions in the forums associated with the service show ongoing experimentation with new ways to acquire source images and improve video quality, pushing the boundaries of what current neural networks can do.

Data collected through systematic analysis indicates significant growth in both the supply of and the demand for synthetic media since 2019. Attackers exploit vulnerabilities in digital infrastructure to amass the datasets needed to train models capable of producing lifelike results. Motivations for participating vary widely, from sexual gratification to revenge, but together they point to deeper systemic flaws that require urgent attention.

Legal frameworks governing digital content creation struggle to keep pace with rapid innovation in this field. While some jurisdictions have attempted regulation, others lag behind because of competing priorities: protecting free speech on the one hand and safeguarding vulnerable populations on the other. As a result, entities like MrDeepFakes continue to operate largely unimpeded despite clearly violating widely shared moral standards.

Public discourse about deepfakes often focuses narrowly on their potential use in political disinformation campaigns. Their role in intimate partner violence, cyberstalking, and gender-based discrimination is just as pressing and deserves the same attention. Advocacy groups are calling for comprehensive reform that addresses root causes, alongside education initiatives that help users recognize fabricated content.

In practice, distinguishing authentic recordings from altered ones becomes harder as the techniques improve. Even trained experts struggle to detect the subtle anomalies that indicate tampering. This poses serious challenges for fields that rely on verifiable documentation, including journalism, law enforcement, and entertainment.

Technological countermeasures offer partial solutions but add their own complexity. Watermarking systems that embed metadata in original files provide traceability, but they can compromise privacy if implemented poorly. Similarly, tools built to detect manipulated media must be updated continually as generation techniques evolve to evade them.

Collaboration among stakeholders is perhaps the most promising path toward lasting progress. By fostering dialogue between academia, industry leaders, policymakers, and affected communities, we can develop constructive approaches tailored to the specific threats posed by the spread of synthetic media.

Ultimately, confronting the implications of deepfake dissemination requires collective action rooted in shared values emphasizing respect for autonomy and integrity. As we navigate this evolving landscape, vigilance remains essential to ensuring technology serves humanity rather than undermining fundamental principles underpinning civilized society.

Readers unfamiliar with the specific cases mentioned here may benefit from further reading. Authoritative sources provide the context needed for an informed discussion of how to respond. Detailed reports that periodically assess trends in this area are one useful resource.

Note that all information provided here is drawn from publicly available records and was accurate as of assessments conducted earlier this year. Ongoing monitoring allows it to be updated as conditions change.